Creators & Designers

Generate and transform media with AI.

- Use Flux, Qwen Image, and custom models

- Generate variations

- Build reusable workflows

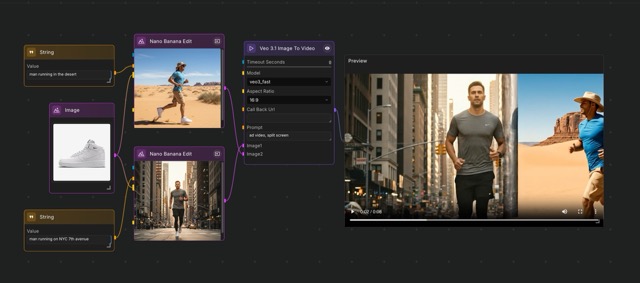

Open-Source Visual AI Workflow Builder

Connect nodes to generate content, analyze data, and automate tasks. Run models locally or via cloud APIs.

NodeTool is a visual workflow builder for AI pipelines: connect nodes for images, video, text, data, and automation. Run locally or deploy to RunPod, Cloud Run, or self-hosted servers.

Generate and transform media with AI.

Build agents, RAG systems, and pipelines.

Process documents and automate tasks without coding.

Build multi-step agents that plan and execute tasks.

Agent patterns → Realtime Agent →

Index documents, search with AI, and answer questions from sources.
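The index, search, and answer steps can be sketched in a few lines of plain Python. This is a minimal illustration, not NodeTool's implementation: it swaps in bag-of-words cosine similarity where a real RAG workflow would typically use embeddings, the names `build_index` and `search` are hypothetical, and the final prompt is only assembled, not sent to a model. The sample documents are sentences taken from this page.

```python
import math
import re
from collections import Counter

def tokenize(text):
    # Lowercase and split into alphanumeric tokens.
    return re.findall(r"[a-z0-9]+", text.lower())

def build_index(docs):
    # Map each document id to a bag-of-words term-frequency vector.
    return {doc_id: Counter(tokenize(text)) for doc_id, text in docs.items()}

def cosine(a, b):
    # Cosine similarity between two term-frequency vectors.
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0

def search(index, query, k=1):
    # Rank document ids by similarity to the query and keep the top k.
    q = Counter(tokenize(query))
    ranked = sorted(index, key=lambda d: cosine(q, index[d]), reverse=True)
    return ranked[:k]

DOCS = {
    "deploy": "Run locally or deploy to RunPod, Cloud Run, or self-hosted servers.",
    "license": "NodeTool is open-source under AGPL-3.0.",
    "models": "Run LLMs, Whisper, and diffusion models on your infrastructure.",
}

question = "Which license is NodeTool released under?"
index = build_index(DOCS)
top = search(index, question)

# Ground the answer in the retrieved source; a model call would go here.
prompt = f"Answer using only this source:\n{DOCS[top[0]]}\n\nQuestion: {question}"
print(prompt)
```

The retrieved passage is placed in the prompt so that the answer can be traced back to a source document.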

RAG pattern → Chat with Docs →

Generate and transform media with AI models.

Movie Posters → Story to Video →

Transform data, extract information, and automate reports.

Data Visualization → Data patterns →

All workflows, assets, and models run on your machine for maximum privacy and control.

Mix local AI with cloud services for flexibility. Use the best tool for each task.

Build workflows by connecting nodes. No coding required. View the entire pipeline on one canvas.
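For readers curious what "connecting nodes" amounts to under the hood, a workflow can be modeled as a directed acyclic graph whose nodes run once their upstream nodes have produced output. The sketch below is an assumption-laden illustration using only the Python standard library; the node functions, `NODES`, `EDGES`, and `run_workflow` are hypothetical and are not NodeTool's API.

```python
from graphlib import TopologicalSorter

# Hypothetical node functions; each receives a dict of its upstream outputs.
def load_text(inputs):
    return "NodeTool connects nodes into pipelines"

def count_words(inputs):
    return len(inputs["load_text"].split())

def make_report(inputs):
    return f"{inputs['count_words']} words: {inputs['load_text']}"

NODES = {
    "load_text": load_text,
    "count_words": count_words,
    "make_report": make_report,
}

# Each node maps to the set of nodes it depends on (its incoming edges).
EDGES = {
    "load_text": set(),
    "count_words": {"load_text"},
    "make_report": {"load_text", "count_words"},
}

def run_workflow(nodes, edges):
    # Execute nodes in dependency order, passing upstream results along.
    results = {}
    for name in TopologicalSorter(edges).static_order():
        upstream = {dep: results[dep] for dep in edges[name]}
        results[name] = nodes[name](upstream)
    return results

results = run_workflow(NODES, EDGES)
print(results["make_report"])  # 5 words: NodeTool connects nodes into pipelines
```

A visual canvas presents the same structure graphically: boxes are the entries in `NODES` and wires are the entries in `EDGES`.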

See every step execute in real time. Inspect intermediate outputs. Streaming execution shows progress as it happens.
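One common way to expose intermediate outputs as they are produced is a generator that yields after every step. The following is a rough sketch of that idea under stated assumptions, not a description of how NodeTool streams results; `stream_pipeline` and the step names are hypothetical.

```python
from typing import Any, Callable, Iterator

Step = tuple[str, Callable[[Any], Any]]

def stream_pipeline(steps: list[Step], value: Any) -> Iterator[tuple[str, Any]]:
    # Run steps in order, yielding each intermediate output as soon as it exists.
    for name, fn in steps:
        value = fn(value)
        yield name, value

steps = [
    ("strip", str.strip),
    ("lowercase", str.lower),
    ("tokenize", str.split),
    ("count", len),
]

events = []
for name, output in stream_pipeline(steps, "  Run Models Locally  "):
    # A UI could update here instead of waiting for the whole pipeline.
    events.append((name, output))
    print(f"[{name}] {output!r}")
```

Because the consumer receives each `(name, output)` pair before the next step starts, it can display progress or inspect a value without waiting for the final result.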

Run LLMs, Whisper, and diffusion models on your infrastructure. Cloud APIs are opt-in.

NodeTool is open-source under AGPL-3.0. Join the Discord, explore the GitHub repo, and share workflows with other builders.